Artificial intelligence has become omnipresent in recent years. State-of-the-art models are open-sourced on a daily basis, and companies are fighting for the best data scientists and machine learning engineers, all with one goal in mind: creating tremendous value by leveraging the power of AI. That sounds great, but reality is harsh: generally only a small percentage of models make it to production and stay there.

What exactly is MLOps?

When it comes to a definition for MLOps, we believe Google’s definition is spot on:

“MLOps is an ML engineering culture and practice that aims at unifying ML system development (Dev) and ML system operation (Ops).” — Google

What is crucial about MLOps is that it is about culture and practices, similar to the DevOps culture we all know, and not about tools. A common mistake is to jump straight into the realm of MLOps tools, a world where you can easily get lost. Tools should ultimately support the practices and not the other way around.

As Chip Huyen put it on Twitter: "Machine learning engineering is 10% machine learning and 90% engineering."

Simply put, MLOps is a culture that focuses on automating machine learning workflows throughout the model lifecycle. Without MLOps, it will be a long and bumpy journey to operate models in production.

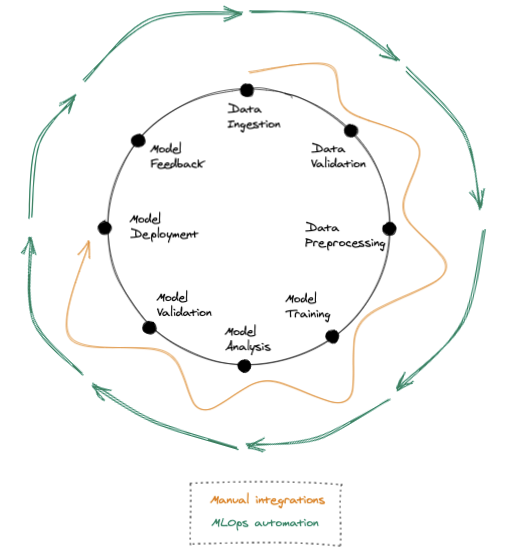

Figure 1: Machine Learning Model lifecycle

Why should we care?

So why is all of this so important? What is a model worth if it cannot be reliably deployed and supported in production for the intended usage? That’s right, models only create value once they’re in production. Enter MLOps. MLOps ultimately drives business value.

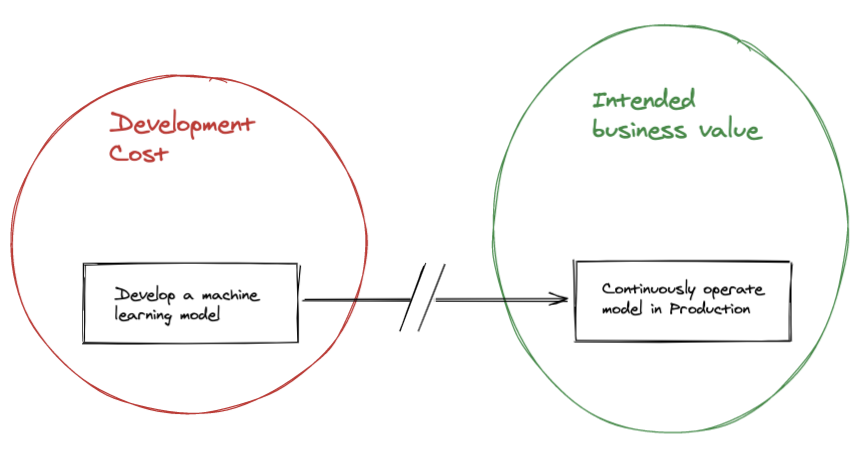

The figure below illustrates the skew between where the intended business value is obtained versus where the majority of the initial development cost is made. If you don’t invest in bridging the gap to successfully deploy and operate models in production, you will probably be left behind disillusioned.

Gap between unlocking business value and initial development cost

“Data scientists can implement and train an ML model with predictive performance on an offline holdout dataset, given relevant training data for their use case. However, the real challenge isn’t building an ML model, the challenge is building an integrated ML system and to continuously operate it in production.”

There are a lot of things that can go wrong when putting a model into production and trying to keep it there. A typical example is that the preprocessing during training is no longer in sync with the preprocessing in production, so the model generates useless predictions. Another is data skew: the data fed to the model in production follows a different distribution than the training data, so the model generates unexpected predictions.
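One way to keep training and production preprocessing in sync is to fit the preprocessing parameters once, store them next to the model, and have both code paths call the same function. The sketch below is a minimal illustration using only the Python standard library. The names `fit_scaler` and `transform`, the toy feature values, and the JSON layout are hypothetical and not taken from any particular MLOps tool.

```python
import json
import statistics


def fit_scaler(values):
    """Learn standardization parameters from the training data only."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    # Guard against a constant feature, which would cause division by zero.
    return {"mean": mean, "std": std if std > 0 else 1.0}


def transform(values, params):
    """Apply the stored parameters; used unchanged in training and serving."""
    return [(v - params["mean"]) / params["std"] for v in values]


# Training side: fit once and persist the parameters with the model artifact.
train_feature = [10.0, 12.0, 14.0, 16.0, 18.0]  # illustrative values
params = fit_scaler(train_feature)
serialized = json.dumps(params)

# Serving side: load the same parameters instead of recomputing them on
# production data, which would silently change what the model sees.
serving_params = json.loads(serialized)
scaled = transform([14.0, 20.0], serving_params)
```

The design choice here is that the serving code never calls `fit_scaler`; it can only reuse what training produced, which removes one common source of mismatch.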

Next to enabling the intended business value of the model, there are some key benefits related to MLOps:

- Prevention of bugs

- Audit trail

- Standardization

- Ability to focus on new models rather than maintaining existing ones

- Happier data scientists and machine learning engineers

It is clear that MLOps brings indispensable value to the table, at the cost of bootstrapping your team with MLOps practices and supportive tooling.

What best practices can we learn from pioneering practitioners?

- Modularize code into logical machine learning related steps

To build a range of simple to complex machine learning workflows, it is key that your code is modularized according to logical machine learning related steps. Think of it as building small LEGO blocks that let you create simple but also very complex structures.

A typical separation of logical machine learning related steps is shown in Figure 1. As indicated in that figure, you do not want to take the manual integration approach, where cluttered Python scripts are typically stitched together with bash scripts.
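As a rough sketch of what modular steps can look like, each step below is a plain function with explicit inputs and outputs, and a workflow is just an ordered list of steps. The step names (`ingest`, `preprocess`, `train`, `evaluate`), the toy data, and the mean-predicting "model" are illustrative assumptions, not a prescribed structure and not necessarily the exact steps of Figure 1.

```python
from typing import Any, Callable, Dict, List, Optional

Context = Dict[str, Any]
Step = Callable[[Context], Context]


def ingest(ctx: Context) -> Context:
    # Placeholder data source; a real step would read from storage.
    return {**ctx, "raw": [1.0, 2.0, None, 4.0]}


def preprocess(ctx: Context) -> Context:
    # Drop missing values.
    return {**ctx, "clean": [x for x in ctx["raw"] if x is not None]}


def train(ctx: Context) -> Context:
    # Toy "model": a constant that predicts the mean of the training data.
    clean = ctx["clean"]
    return {**ctx, "model": sum(clean) / len(clean)}


def evaluate(ctx: Context) -> Context:
    # Mean absolute error of the constant prediction on the same data.
    clean, model = ctx["clean"], ctx["model"]
    mae = sum(abs(x - model) for x in clean) / len(clean)
    return {**ctx, "mae": mae}


def run_pipeline(steps: List[Step], ctx: Optional[Context] = None) -> Context:
    """Run the steps in order, passing the growing context along."""
    ctx = dict(ctx or {})
    for step in steps:
        ctx = step(ctx)
    return ctx


result = run_pipeline([ingest, preprocess, train, evaluate])
```

Because every step shares the same signature, a simpler workflow is just a shorter list, for example `run_pipeline([ingest, preprocess])`, and each step can be tested on its own.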

- Containerize code after experimentation phase

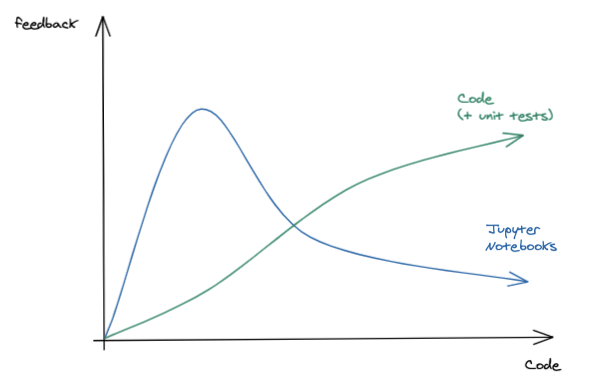

Notebooks are great for experimenting quickly and getting fast feedback at an early stage. But after the initial exploratory phase, the experiments that return a positive result deserve to be industrialized by applying engineering best practices, just as building a car prototype is a totally different thing from manufacturing an actual car.

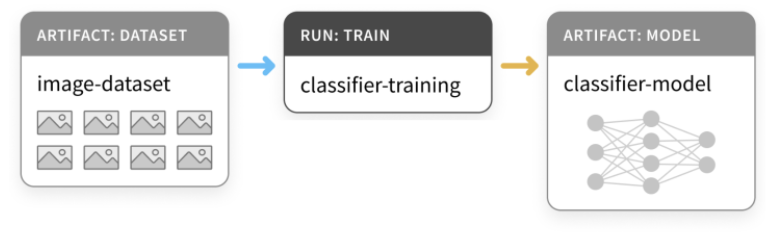

- Version control data and models

Compared to traditional software, machine learning systems need to deal with extra layers of complexity: the models and the data on which those models are trained. It is key to version control your data and models for reproducibility and audit purposes.
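A lightweight way to illustrate the idea is to fingerprint the training data and the model artifact with a content hash and record both in a manifest. This is only a sketch of the principle using Python's standard library; dedicated data and model versioning tools do much more. The `fingerprint` and `build_manifest` helpers, the manifest fields, and the sample bytes are hypothetical.

```python
import hashlib
import json


def fingerprint(payload: bytes) -> str:
    """Return a SHA-256 hex digest that changes whenever the content changes."""
    return hashlib.sha256(payload).hexdigest()


def build_manifest(data: bytes, model: bytes, params: dict) -> dict:
    """Tie a model artifact to the exact data and parameters that produced it."""
    return {
        "data_sha256": fingerprint(data),
        "model_sha256": fingerprint(model),
        "params": params,
    }


# Stand-ins for a real dataset file and a serialized model.
data_v1 = b"age,income\n34,52000\n45,61000\n"
data_v2 = b"age,income\n34,52000\n45,61000\n29,48000\n"
model_bytes = b"serialized-model-placeholder"

manifest_v1 = build_manifest(data_v1, model_bytes, {"learning_rate": 0.1})
manifest_v2 = build_manifest(data_v2, model_bytes, {"learning_rate": 0.1})

# The manifest can be stored alongside the code commit for auditing.
print(json.dumps(manifest_v1, indent=2))
```

Adding a single row to the dataset changes `data_sha256`, so two training runs can be told apart even when the code and parameters are identical.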

- Mixed, autonomous teams

The lifecycle of a machine learning model is so complex that it is very unlikely to be properly managed if ownership changes along the way. Throwing a model over the wall from the data science team to the operations team is typically a recipe for disaster. The operations team lacks the machine learning context, for example that data can drift over time: the status code of the model API may show a green light while the actual predictions in the response deviate from their intended goal. Who is to blame?

Mixed, autonomous teams ensure overall coverage during the lifecycle of a machine learning model.
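The drift scenario described above, where the API looks healthy while the predictions quietly shift, is something a mixed team can monitor for explicitly. The sketch below compares the distribution of production scores against training scores using the Population Stability Index (PSI). The function name `psi`, the bucket count, the sample scores, and the `ALERT_THRESHOLD` of 0.25 (a commonly quoted rule of thumb, to be tuned per use case) are all assumptions made for illustration.

```python
import math


def psi(expected, actual, bins=4):
    """Population Stability Index between two samples of model scores."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # all reference values identical; avoid division by zero

    def bucket_shares(sample):
        counts = [0] * bins
        for x in sample:
            # Clamp values outside the reference range into the edge buckets.
            idx = min(max(int((x - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) for empty buckets.
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = bucket_shares(expected), bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


ALERT_THRESHOLD = 0.25  # assumed rule of thumb, not a universal constant

training_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
production_scores = [0.6, 0.7, 0.7, 0.8, 0.8, 0.9, 0.9, 0.9]

# Every request may have returned HTTP 200, yet the scores have shifted.
drifted = psi(training_scores, production_scores) > ALERT_THRESHOLD
```

A check like this only flags that something changed; deciding whether to retrain, roll back, or investigate the data source still needs people with both the machine learning and the operations context.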

- Peer reviews, peer reviews and more peer reviews

Remember that as a developer, you typically read far more code than you write. Peer reviews ensure consistent quality and, most importantly, check for readability and a common understanding of the code among team members.

Of course, not every member of the team needs to review every single pull request. Typically you tag the team members who either need to be aware of the content of the pull request or are most knowledgeable about it. Pull requests are also an important way to keep team members informed of the progress of a feature or project.

Summary

In this post we have explained why MLOps is important and shared some best practices. The key takeaways are:

- MLOps is a culture that focuses on automating ML workflows throughout the model lifecycle

- MLOps powers standardization, prevention of bugs, audit trail and the ability to focus on new models rather than maintaining existing ones

- MLOps is an indispensable enabler for organisations to adopt ML

- MLOps requires an initial investment that definitely pays off

- MLOps is about practices, and tools should support those